Adobe’s core focus at its MAX keynote last year was to give its creative apps a broader feature suite built on artificial intelligence (AI) foundations, led by its in-house Firefly AI. At the core of that was the Firefly Video Model, which sparked a battle for video generation supremacy, with Google, OpenAI and others jumping into the ring. At this year’s keynote, Adobe has begun writing the next chapter in that journal, which includes adding a broader spectrum of models within its apps, as well as new tools that draw utility from generative AI in professional workflows. There is a sense that Adobe understands Firefly AI’s strengths, and is relying on partner models to give users more choice, specific expertise, and often a different tonality in generations.

These include updated Firefly models, custom models among them, as well as more models from other AI companies for users to create with. As Alexandru Costin, vice president of Generative AI and Sensei at Adobe, says, “We launched Adobe Firefly three years ago as the place for ideating, creating and finishing the production process for digital content. Across all the core modalities that our customers care about from images, designs, templates, video, audio, and we’re doing it not only with Adobe’s models but with the world’s top AI models.”

New Firefly models and layered editing flexibility

The new Firefly Image Model 5 is set to be added to the Firefly platform as well as Adobe’s creative apps. Key highlights of this model include native 4-megapixel resolution, the promise of photorealistic quality that extends to rendering groups of people, and prompt-based editing. There are also improvements in how light is handled in generations. “It is about increasing the quality of the generation, and also making the model more useable and useful for production workflows,” explains Costin.

Adobe is also adding layered image editing capabilities to Firefly. The key functions are the ability to composite or replace elements, edit light layers and manipulate lighting, and control the position of objects in a visual. Any edits made using layered image editing can be carried into Adobe Photoshop as well. “The innovation we’re showing here is mixing the best of generative AI with the best of Adobe technology and reinventing some of these basic capabilities, with those two worlds coming together as one,” adds Costin.

Partner models, a wholesome generative menu

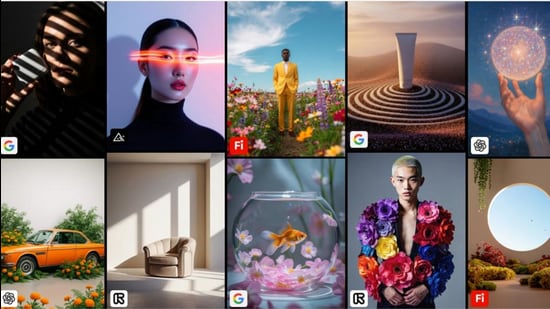

The addition of popular models from other AI companies is key to Adobe’s vision of making Firefly a powerful piece in its already capable jigsaw of creative apps. Earlier in the year, the company announced the first steps in that direction, with OpenAI’s GPT image, Google’s Imagen and Veo 2, and Black Forest Labs’ Flux 1.1 models being added to the Firefly app. Now, Adobe is adding Topaz Labs’ Topaz Bloom (image) and Topaz Gigapixel (image), and the ElevenLabs Multilingual v2 audio generation model.

At this time, users of the Firefly app or Firefly on the web can choose from a number of models, including Google’s newest Gemini 2.5 (Nano Banana) and Flux Kontext Pro for image generation, as well as Google Veo 3 Fast, Pika 2.2, and Runway Gen-4 for videos. For now, the partner models include only one audio generation option, the aforementioned ElevenLabs Multilingual v2, for which the key use case is expected to be voice-over generation. This should give users a choice and variety of styles.

Fine-tuning a custom Firefly

Adobe is betting big on Firefly custom models. For context, these are models trained on a particular set of data to achieve results tuned for a focused workflow; this could be a set of brand visuals and images used to build more content in the same style. Until now, only businesses and enterprises could take advantage of Firefly custom models. Now, Adobe is unlocking access for all creators, who will be able to set up and use these models in the Firefly app or Firefly Boards. It is expected that Adobe will extend the same security and governance policies to custom models for creators as it did for enterprise users, including a default opt-out from using the custom data to train the foundational Firefly generative AI models.

Tools to draw from the model array

There is a sense that Adobe isn’t adding partner AI models to Firefly purely for the sake of optics. In fact, the company is adding a number of new tools that will be useful for creators. The Generate Soundtrack option, for instance, though still in beta testing, will be able to generate what Adobe claims will be studio-quality tracks that are also fully licensed.

“We’re also introducing our generative speech tool using both Adobe’s own text-to-speech model, and integrating ElevenLabs’ model with more than ten voices too. So, you have a large catalogue of voices, and can emphasise portions of your talk track,” explains Costin. The Generate Speech tool is also in beta for the time being.

The Firefly Video Editor, which is on a beta waitlist at the time of launch, will help with generating, organising, trimming and sequencing clips. As part of Firefly, it will sit alongside Adobe’s Premiere video editing apps, including the mobile app. Adobe is also adding new capabilities to Firefly Boards, which went global last month and lets teams of designers collaborate on creations; new additions include 2D to 3D generation support.